Introduction to Docker

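Motivating problem: after developing an application locally, we need to deploy it to a server, but the server rarely has the same runtime environment as our machine. It may lack a database, be missing dependency packages, or ship outdated versions of required software. Rebuilding the runtime environment on the server can take a long time and is rarely worth the effort. Is there a way to package the entire runtime environment from the development machine and deploy it to the server so that the program simply runs?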

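Docker lets developers package an application together with its dependencies into a portable container and then ship it to any mainstream Linux machine. Docker is an open-source application container engine written in Go and released under the Apache 2.0 license. Containers are fully sandboxed and expose no interfaces to one another (much like iPhone apps); more importantly, their performance overhead is very low. [Source: Internet]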

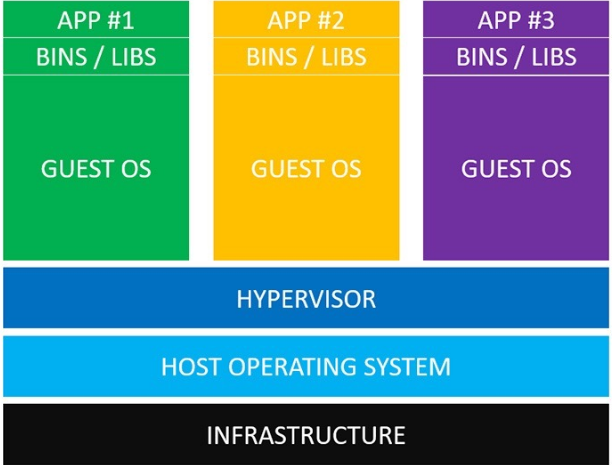

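From this definition, Docker looks similar to a virtual machine: any application, such as Tomcat, Java, or MySQL, can be packaged, installed, and run inside it. It is not the same as a virtual machine, however. To tell the two apart, let us first look at how a virtual machine stack is organized, as shown in the figure below: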

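Infrastructure: a personal computer, a data-center server, or a cloud host

Host Operating System: macOS, Windows, or a Linux distribution

Hypervisor (virtual machine manager): VirtualBox, VMware

Guest Operating System: the virtual machines running inside VirtualBox or VMware

Dependencies (bins/libs): the dependencies the application needs, such as Python or MySQL

Applications (APP): web applications, back-end applications, database applications, and so on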

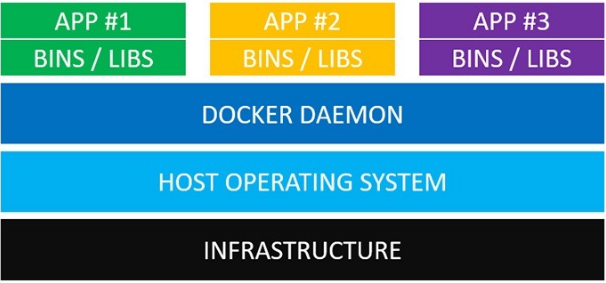

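As the figure shows, isolating different applications requires installing and starting separate virtual machines, which boot slowly and consume a lot of resources. Applications running in Docker containers are likewise isolated, yet they start very quickly (on the order of milliseconds) and add very little overhead. The Docker stack is organized as follows: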

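Infrastructure: a personal computer, a data-center server, or a cloud host

Host Operating System: Linux

Docker Daemon: takes the place of the hypervisor. It is a background process running on top of the host operating system that manages Docker containers, playing the role that VirtualBox or VMware plays for virtual machines

Dependencies (bins/libs): all of an application's dependencies are packaged in a Docker image, and Docker containers are created from Docker images

Applications (APP): the application's source code and its dependencies are packaged in a Docker image. Different applications need different images and run in different containers, isolated from one another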

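The Docker daemon communicates directly with the host operating system to allocate resources to each container. It also isolates containers from the host operating system and from one another.

A virtual machine takes minutes to boot, whereas a Docker container can start in milliseconds. Because there is no bulky guest operating system, Docker saves a great deal of disk space and other system resources.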

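Installing Docker (on Ubuntu)

Before creating Docker containers you need to install the Docker engine, which is analogous to installing VirtualBox or VMware for virtual machines.

# Check the kernel version; Docker requires kernel 3.10 or later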

$ uname -r

4.13.0-36-generic
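The version check above can also be scripted. The following is a minimal sketch, assuming a minimum kernel of 3.10; the function names are hypothetical and it only looks at the major and minor numbers of the string that uname -r prints.

```python
import re
from typing import Tuple

MIN_KERNEL = (3, 10)  # assumed minimum (major, minor) kernel version for Docker


def parse_kernel_release(release: str) -> Tuple[int, int]:
    """Extract (major, minor) from a string such as '4.13.0-36-generic'."""
    match = re.match(r"(\d+)\.(\d+)", release)
    if match is None:
        raise ValueError(f"unrecognized kernel release: {release!r}")
    return int(match.group(1)), int(match.group(2))


def kernel_supports_docker(release: str) -> bool:
    # Tuples compare element by element, so (3, 9) < (3, 10) < (4, 13).
    return parse_kernel_release(release) >= MIN_KERNEL


# The release string from the transcript above.
print(kernel_supports_docker("4.13.0-36-generic"))
```

Comparing tuples rather than strings avoids the trap where "3.9" sorts after "3.10" lexicographically.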

# Update the package index

$ sudo apt-get update

$ sudo apt-get install \
    apt-transport-https \
    ca-certificates \
    curl \
    gnupg-agent \
    software-properties-common

$ curl -fsSL https://download.docker.com/linux/ubuntu/gpg | sudo apt-key add -

$ sudo apt-key fingerprint 0EBFCD88

$ sudo add-apt-repository "deb [arch=amd64] https://mirrors.aliyun.com/docker-ce/linux/ubuntu $(lsb_release -cs) stable"

# Install Docker

$ sudo apt-get update

$ sudo apt-get install docker-ce docker-ce-cli containerd.io

# Check the installed Docker version (no error means the installation succeeded)

$ docker --version

Docker version 19.03.13, build 4484c46d9d

# Run the hello-world example (this command pulls the image from the registry on first run)

$ sudo docker run hello-world

latest: Pulling from library/hello-world

9bb5a5d4561a: Pull complete

Digest: sha256:f5233545e43561214ca4891fd1157e1c3c563316ed8e237750d59bde73361e77

Status: Downloaded newer image for hello-world:latest

Hello from Docker!

This message shows that your installation appears to be working correctly.

....

# List local images

$ sudo docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

hello-world latest e38bc07ac18e 2 months ago 1.85 kB

# hello-world is the image pulled from the registry; docker run hello-world:latest starts a container from it

# Configure a registry mirror inside China

$ sudo vim /etc/docker/daemon.json

{

"registry-mirrors": [

"http://hub-mirror.c.163.com"

]

}

$ sudo systemctl daemon-reload

$ sudo systemctl restart docker
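Editing daemon.json by hand can clobber settings that are already there. Below is a small sketch of merging a mirror into existing configuration text instead of overwriting it; the helper name and the pre-existing "log-driver" entry are assumptions made for illustration.

```python
import json


def add_registry_mirror(config_text: str, mirror: str) -> str:
    """Return daemon.json text with `mirror` present in "registry-mirrors"."""
    # An empty file is treated as an empty configuration object.
    config = json.loads(config_text) if config_text.strip() else {}
    mirrors = config.setdefault("registry-mirrors", [])
    if mirror not in mirrors:  # keep the operation idempotent
        mirrors.append(mirror)
    return json.dumps(config, indent=2)


existing = '{"log-driver": "json-file"}'  # hypothetical pre-existing setting
print(add_registry_mirror(existing, "http://hub-mirror.c.163.com"))
```

The result would still need to be written to /etc/docker/daemon.json and followed by the two systemctl commands above.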

Containers and images

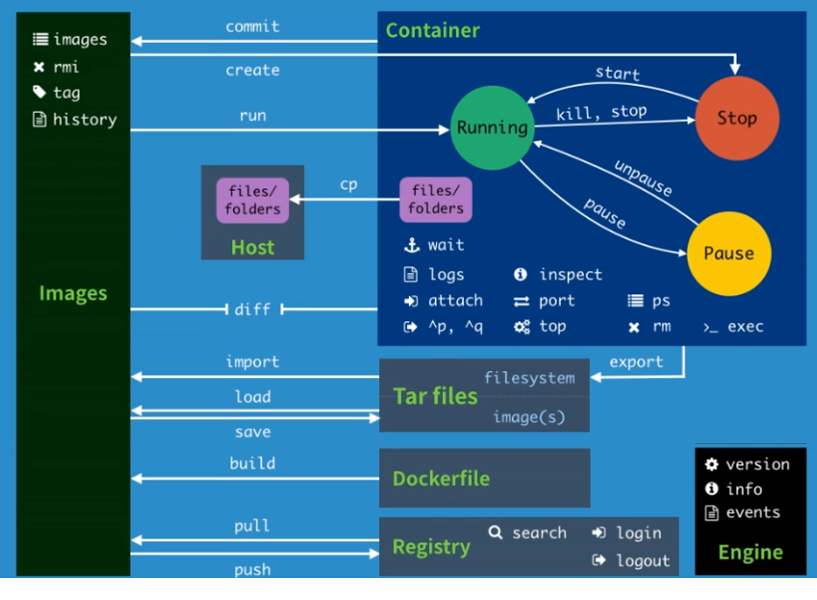

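As the figure above shows, a container is instantiated from an image, much as in object-oriented programming: think of the image as a class and the container as an object instantiated from it. A Docker image can be built on top of another Docker image, much like class inheritance.

A Docker image is similar in concept to a virtual machine image: it is a read-only template, a self-contained file system holding the data needed to run a container, and it can be used to create new containers (docker create).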

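Docker runs applications in containers. A container is a running instance created from an image, comparable to a virtual machine installed from an .iso file. It supports reads and writes, and containers are isolated from one another. Each container runs a specific application and contains that application's code and the dependencies it needs, so a container can be viewed as a stripped-down Linux environment. Multiple containers can be started from the same image.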
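The class and instance analogy can be made concrete with a toy model. This is only an illustration of the idea, not how Docker is implemented; the Image and Container classes and the file paths are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional


@dataclass(frozen=True)
class Image:
    """Read-only template; `base` models an image built on another image."""
    name: str
    files: tuple = ()               # immutable, like read-only image content
    base: Optional["Image"] = None

    def all_files(self) -> tuple:
        inherited = self.base.all_files() if self.base else ()
        return inherited + self.files


@dataclass
class Container:
    """Instance of an image with its own private writable state."""
    image: Image
    writable: Dict[str, str] = field(default_factory=dict)

    def write(self, path: str, data: str) -> None:
        self.writable[path] = data  # never touches the image


centos = Image("centos", files=("/bin/bash",))
jdk = Image("jdk8", files=("/usr/local/jdk1.8.0_191/bin/java",), base=centos)

c1, c2 = Container(jdk), Container(jdk)  # two containers from one image
c1.write("/tmp/note", "only in c1")
print(jdk.all_files())
print(c2.writable)
```

Writing in c1 leaves both the image and c2 untouched, which mirrors the isolation described above.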

https://docs.docker.com/engine/reference/commandline/docker/

Docker commands

# List local images

$ sudo docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

hello-world latest e38bc07ac18e 2 months ago 1.85 kB

--all , -a Show all images (default hides intermediate images)

--digests Show digests

--filter , -f Filter output based on conditions provided

--format Pretty-print images using a Go template

--no-trunc Don’t truncate output

--quiet , -q Only show numeric IDs

# Search for images

$ sudo docker search mysql

NAME DESCRIPTION STARS OFFICIAL AUTOMATED

mysql MySQL is a widely used, open-source relation… 10202 [OK]

mariadb MariaDB is a community-developed fork of MyS… 3753 [OK]

mysql/mysql-server Optimized MySQL Server Docker images. Create… 744 [OK]

# Pull an image

$ sudo docker pull mysql

Using default tag: latest

latest: Pulling from library/mysql

852e50cd189d: Pull complete

29969ddb0ffb: Pull complete

a43f41a44c48: Pull complete

5cdd802543a3: Pull complete

b79b040de953: Pull complete

938c64119969: Pull complete

7689ec51a0d9: Pull complete

a880ba7c411f: Pull complete

984f656ec6ca: Pull complete

9f497bce458a: Pull complete

b9940f97694b: Pull complete

2f069358dc96: Pull complete

Digest: sha256:4bb2e81a40e9d0d59bd8e3dc2ba5e1f2197696f6de39a91e90798dd27299b093

Status: Downloaded newer image for mysql:latest

docker.io/library/mysql:latest

# Remove an image

$ sudo docker rmi IMAGE_ID

Untagged: hello-world:latest

Untagged: hello-world@sha256:e7c70bb24b462baa86c102610182e3efcb12a04854e8c582838d92970a09f323

Deleted: sha256:bf756fb1ae65adf866bd8c456593cd24beb6a0a061dedf42b26a993176745f6b

Deleted: sha256:9c27e219663c25e0f28493790cc0b88bc973ba3b1686355f221c38a36978ac63

#--------------------------------------------------------------#

$ docker run [OPTIONS] IMAGE[:TAG|@DIGEST] [COMMAND] [ARG...]

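--name string Assign a name to the container

-it Start in interactive mode with a terminal

-p, --publish list Port mapping: -p host_port:container_port, or -p container_port (random host port)

-P, --publish-all Publish all exposed ports to random host ports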

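# Start a container

$ docker run hello-world # run creates a container from the image and starts it

# Start a container from a specific image tag

$ docker run hello-world:latest

# List running containers

$ docker ps

# List all containers, including stopped ones

$ docker ps -a

# Start an existing container

$ docker start CONTAINER_ID

# Start a container in the background (detached mode)

$ docker run -d IMAGE

$ docker start CONTAINER_ID # docker start has no -d flag; it does not attach by default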

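# Re-attach to a running container

$ docker attach CONTAINER_ID

# Start a new process in a running container

$ docker exec -i -t CONTAINER_ID /bin/bash

# Stop a detached container

$ docker stop CONTAINER_ID # stops gracefully, waiting for the container to exit

$ docker kill CONTAINER_ID # stops the container immediately

# Remove a container

$ docker rm CONTAINER_ID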

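# Container port mapping

# docker run -P (--publish-all): publish all exposed ports

$ docker run -P -i -t ubuntu # random host ports

# docker run -p: publish specific ports

$ docker run -p 80 -i -t ubuntu # container port only; random host port

$ docker run -p 8080:80 -i -t ubuntu # host_port:container_port

$ docker run -p 0.0.0.0::80 -i -t ubuntu # host_ip::container_port (random host port)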

$ docker run -p 0.0.0.0:8080:80 -i -t ubuntu # host_ip:host_port:container_port
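To see how the different -p forms break down, here is a rough parser sketch. It handles only the IPv4 forms listed above (no protocol suffix such as /tcp, no port ranges, no IPv6), and the PortMapping type and parse_publish function are hypothetical names. The form with an empty host port, ip::container_port, is the one Docker documents for binding a random host port on a specific address.

```python
from typing import NamedTuple, Optional


class PortMapping(NamedTuple):
    host_ip: Optional[str]
    host_port: Optional[int]   # None means Docker picks a random host port
    container_port: int


def parse_publish(spec: str) -> PortMapping:
    """Split a -p value into host IP, host port, and container port."""
    parts = spec.split(":")
    if len(parts) == 1:                      # "80"
        return PortMapping(None, None, int(parts[0]))
    if len(parts) == 2:                      # "8080:80"
        return PortMapping(None, int(parts[0]), int(parts[1]))
    if len(parts) == 3:                      # "0.0.0.0:8080:80" or "0.0.0.0::80"
        ip, host, container = parts
        return PortMapping(ip, int(host) if host else None, int(container))
    raise ValueError(f"unsupported publish spec: {spec!r}")


for spec in ("80", "8080:80", "0.0.0.0::80", "0.0.0.0:8080:80"):
    print(spec, "->", parse_publish(spec))
```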

#--------------------------------------------------------------#

# Show detailed container information

$ sudo docker inspect [OPTIONS] NAME|ID [NAME|ID...]

#--------------------------------------------------------------#

# View container logs

$ sudo docker logs [OPTIONS] CONTAINER

--details Show extra details provided to logs

--follow , -f Follow log output

--since Show logs since timestamp (e.g. 2013-01-02T13:23:37) or relative (e.g. 42m for 42 minutes)

--tail all Number of lines to show from the end of the logs

--timestamps , -t Show timestamps

#--------------------------------------------------------------#

# Show the processes running in a container

$ sudo docker top CONTAINER [ps OPTIONS]

#--------------------------------------------------------------#

# Copy files between a container and the host

$ sudo docker cp [OPTIONS] CONTAINER:SRC_PATH DEST_PATH|-

$ sudo docker cp [OPTIONS] SRC_PATH|- CONTAINER:DEST_PATH

#--------------------------------------------------------------#

# Graphical management tool: Portainer, https://www.portainer.io/installation/

#--------------------------------------------------------------#

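# Create a new image from the current state of a container

$ docker commit -m='ssh install' -a='jiaopan' cb57c338f1c9 kyleson/jpos

# -m commit message

# -a author

# cb57c338f1c9 container ID

# kyleson/jpos image name

# -p pause the container during commit

# Push the image to Docker Hub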

$ sudo docker login

$ sudo docker push kyleson/jpos

Docker data volumes

$ sudo docker run -v /home/test:/home -it centos /bin/bash

--volume , -v Bind mount a volume # -v host_dir:container_dir

# Confirm that the directory was bound

$ sudo docker inspect CONTAINER_ID

"Mounts": [

{

"Type": "bind",

"Source": "/home/test",

"Destination": "/home",

"Mode": "",

"RW": true,

"Propagation": "rprivate"

}

]

# Note: /home/test on the host and /home in the container refer to the same data, so changes on either side are visible on the other
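When a container has several mounts, reading the inspect output by eye gets tedious. The sketch below extracts the bind mounts from JSON shaped like the excerpt above; the bind_mounts helper is a hypothetical name, and the input is reduced to the Mounts field (real docker inspect output is a JSON array with one full object per container).

```python
import json

# Excerpt of `docker inspect` output, reduced to the "Mounts" field shown above.
inspect_excerpt = """
{"Mounts": [{"Type": "bind", "Source": "/home/test", "Destination": "/home",
             "Mode": "", "RW": true, "Propagation": "rprivate"}]}
"""


def bind_mounts(inspect_json: str):
    """Return (host_path, container_path, read_write) for each bind mount."""
    data = json.loads(inspect_json)
    return [
        (m["Source"], m["Destination"], m["RW"])
        for m in data.get("Mounts", [])
        if m.get("Type") == "bind"
    ]


print(bind_mounts(inspect_excerpt))  # [('/home/test', '/home', True)]
```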

# Share data between containers

--volumes-from Mount volumes from the specified container(s)

$ sudo docker run --volumes-from 777f7dc92da7 -i -t ubuntu pwd

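Dockerfile

FROM centos

MAINTAINER jiaopaner<jiaopaner@qq.com>

# Use a custom variable name; overriding PATH would stop later RUN steps from finding yum
ENV MYPATH /home

WORKDIR $MYPATH

# -y answers prompts automatically, since docker build is non-interactive
RUN yum install -y vim

RUN yum install -y net-tools

EXPOSE 80

# Only the last CMD in a Dockerfile takes effect
CMD echo $MYPATH

CMD echo "----------end---------"

CMD /bin/bash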

$ sudo docker build -f dockerfile -t jp-centos .

Sending build context to Docker daemon 2.048kB

Step 1/8 : FROM centos

---> 0d120b6ccaa8

Step 2/8 : MAINTAINER jiaopaner<jiaopaner@qq.com>

---> Running in 0f054a284458

Removing intermediate container 0f054a284458

---> 350dafb870cf

Step 3/8 : ENV PATH /home

---> Running in 86e71faf7d39

Removing intermediate container 86e71faf7d39

---> ce30199ce12b

Step 4/8 : WORKDIR $PATH

---> Running in 68ee5a3cc62a

Removing intermediate container 68ee5a3cc62a

---> 9142ba699de8

Step 5/8 : EXPOSE 80

---> Running in 7b1d81ba8276

Removing intermediate container 7b1d81ba8276

---> 2c1c922e9eec

Step 6/8 : CMD echo $PATH

---> Running in 9137d52fbda2

Removing intermediate container 9137d52fbda2

---> ffce4c2caec7

Step 7/8 : CMD echo "----------end---------"

---> Running in 61d1d5af663c

Removing intermediate container 61d1d5af663c

---> 6eeb9214b922

Step 8/8 : CMD /bin/bash

---> Running in 48b3775713e7

Removing intermediate container 48b3775713e7

---> 1536cb9c9daa

Successfully built 1536cb9c9daa

Successfully tagged jp-centos:latest

$ sudo docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

jp-centos latest 1536cb9c9daa About a minute ago 215MB
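The Dockerfile above contains several CMD instructions, but Docker uses only the last one when a container starts. The sketch below illustrates that rule on a reduced copy of the file; effective_cmd is a hypothetical helper that ignores line continuations and parser directives, so it is not a real Dockerfile parser.

```python
def effective_cmd(dockerfile_text: str):
    """Return the argument of the last CMD instruction, or None if there is none."""
    last = None
    for line in dockerfile_text.splitlines():
        stripped = line.strip()
        if not stripped or stripped.startswith("#"):
            continue  # skip blank lines and comments
        instruction, _, argument = stripped.partition(" ")
        if instruction.upper() == "CMD":
            last = argument.strip()  # a later CMD replaces any earlier one
    return last


dockerfile = """
FROM centos
CMD echo "----------end---------"
CMD /bin/bash
"""
print(effective_cmd(dockerfile))  # /bin/bash
```

This is why a container started from jp-centos drops into /bin/bash rather than printing the echo lines.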

#jdk8

FROM centos

MAINTAINER jiaopaner

ADD jdk-8u191-linux-x64.tar.gz /usr/local/

ENV JAVA_HOME /usr/local/jdk1.8.0_191/

ENV PATH $JAVA_HOME/bin:$PATH

ENTRYPOINT ["java","-version"]

Docker networking

$ ip addr

3: docker0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN group default

link/ether 02:42:12:01:fd:bc brd ff:ff:ff:ff:ff:ff

inet 172.17.0.1/16 brd 172.17.255.255 scope global docker0

valid_lft forever preferred_lft forever

# Show a container's internal IP address

$ sudo docker exec -it CONTAINER_ID ip addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

4: eth0@if5: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default

link/ether 02:42:ac:11:00:02 brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet 172.17.0.2/16 brd 172.17.255.255 scope global eth0

valid_lft forever preferred_lft forever

# List Docker networks

$ sudo docker network ls

NETWORK ID NAME DRIVER SCOPE

3b234a9aa88b bridge bridge local

d07463d88601 host host local

5c0b56f3d183 none null local

# Create a user-defined network

$ sudo docker network create --driver bridge --subnet 192.168.0.0/16 --gateway 192.168.0.1 mynet

$ sudo docker network ls

NETWORK ID NAME DRIVER SCOPE

3b234a9aa88b bridge bridge local

0217c6ae906e mynet bridge local

$ sudo docker run -it -d --name mynet-os --net mynet centos

$ sudo docker exec -it d9d07c8f46cf ip addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

7: eth0@if8: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default

link/ether 02:42:c0:a8:00:02 brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet 192.168.0.2/16 brd 192.168.255.255 scope global eth0

valid_lft forever preferred_lft forever
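The addresses in the output above follow directly from the --subnet and --gateway values given to docker network create. A short sketch with Python's standard ipaddress module shows the relationship; is_valid_container_ip is a hypothetical helper, and the rule it encodes (exclude the network, broadcast, and gateway addresses) is a simplification.

```python
import ipaddress

subnet = ipaddress.ip_network("192.168.0.0/16")   # value passed to --subnet
gateway = ipaddress.ip_address("192.168.0.1")     # value passed to --gateway


def is_valid_container_ip(candidate: str) -> bool:
    """True if the address is in the subnet and is not network, broadcast, or gateway."""
    ip = ipaddress.ip_address(candidate)
    reserved = {subnet.network_address, subnet.broadcast_address, gateway}
    return ip in subnet and ip not in reserved


print(subnet.broadcast_address)              # 192.168.255.255, the "brd" value above
print(is_valid_container_ip("192.168.0.2"))  # True: address of mynet-os
print(is_valid_container_ip("172.17.0.2"))   # False: belongs to the default bridge
```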

# On a user-defined network, containers can reach each other by container name

$ sudo docker run -it -d --name mynet-os02 --net mynet centos

$ sudo docker exec -it mynet-os ping mynet-os02

PING mynet-os02 (192.168.0.3) 56(84) bytes of data.

64 bytes from mynet-os02.mynet (192.168.0.3): icmp_seq=1 ttl=64 time=0.064 ms

64 bytes from mynet-os02.mynet (192.168.0.3): icmp_seq=2 ttl=64 time=0.072 ms

64 bytes from mynet-os02.mynet (192.168.0.3): icmp_seq=3 ttl=64 time=0.072 ms

64 bytes from mynet-os02.mynet (192.168.0.3): icmp_seq=4 ttl=64 time=0.072 ms

# Connect a container to another network

docker network connect [OPTIONS] NETWORK CONTAINER

--alias Add network-scoped alias for the container

--driver-opt driver options for the network

--ip IPv4 address (e.g., 172.30.100.104)

--ip6 IPv6 address (e.g., 2001:db8::33)

--link Add link to another container

--link-local-ip Add a link-local address for the container

$ sudo docker ps -a

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

518839a304cc centos "/bin/bash" 9 minutes ago Up 9 minutes mynet-os02

d9d07c8f46cf centos "/bin/bash" 11 minutes ago Up 11 minutes mynet-os

d32dd57bf19e centos "/bin/bash" 47 minutes ago Up 47 minutes interesting_davinci

$ sudo docker network connect mynet interesting_davinci

$ sudo docker exec -it interesting_davinci ip addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

4: eth0@if5: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default

link/ether 02:42:ac:11:00:02 brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet 172.17.0.2/16 brd 172.17.255.255 scope global eth0

valid_lft forever preferred_lft forever

11: eth1@if12: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default

link/ether 02:42:c0:a8:00:04 brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet 192.168.0.4/16 brd 192.168.255.255 scope global eth1

valid_lft forever preferred_lft forever

Deploying a Redis cluster

# Create the Redis network

$ sudo docker network create redis-net --subnet 192.160.0.0/16

Redis configuration script

for port in $(seq 1 6);

do

mkdir -p /home/jiaopan/test/redis/node-${port}/conf

touch /home/jiaopan/test/redis/node-${port}/conf/redis.conf

cat << EOF >/home/jiaopan/test/redis/node-${port}/conf/redis.conf

port 6379

bind 0.0.0.0

cluster-enabled yes

cluster-config-file nodes.conf

cluster-node-timeout 5000

cluster-announce-ip 192.160.0.1${port}

cluster-announce-port 6379

cluster-announce-bus-port 16379

appendonly yes

EOF

done
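The only line that differs between the six generated files is the announce address. For comparison, here is a sketch of the same template in Python; render_redis_conf and its subnet_prefix parameter are hypothetical, and the sketch returns the text rather than writing files under /home/jiaopan/test/redis.

```python
def render_redis_conf(node: int, subnet_prefix: str = "192.160.0.1") -> str:
    """Build the redis.conf text for node 1..6, mirroring the shell heredoc."""
    if not 1 <= node <= 6:
        raise ValueError("this sketch only covers nodes 1 through 6")
    lines = [
        "port 6379",
        "bind 0.0.0.0",
        "cluster-enabled yes",
        "cluster-config-file nodes.conf",
        "cluster-node-timeout 5000",
        f"cluster-announce-ip {subnet_prefix}{node}",  # 192.160.0.11 .. 192.160.0.16
        "cluster-announce-port 6379",
        "cluster-announce-bus-port 16379",
        "appendonly yes",
    ]
    return "\n".join(lines) + "\n"


for node in range(1, 7):
    # Print only the per-node line to show what varies.
    print(node, render_redis_conf(node).splitlines()[5])
```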

Script to start the Redis containers

for port in $(seq 1 6);

do

sudo docker run -p 637${port}:6379 -p 1637${port}:16379 --name redis-0${port} -v /home/jiaopan/test/redis/node-${port}/data:/data -v /home/jiaopan/test/redis/node-${port}/conf/redis.conf:/etc/redis/redis.conf -d --net redis-net --ip 192.160.0.1${port} redis redis-server /etc/redis/redis.conf

done

$ bash redis-start.sh

Unable to find image 'redis:latest' locally

latest: Pulling from library/redis

852e50cd189d: Pull complete

76190fa64fb8: Pull complete

9cbb1b61e01b: Pull complete

d048021f2aae: Pull complete

6f4b2af24926: Pull complete

1cf1d6922fba: Pull complete

Digest: sha256:5b98e32b58cdbf9f6b6f77072c4915d5ebec43912114031f37fa5fa25b032489

Status: Downloaded newer image for redis:latest

5be42fac290d85699ded763bb7fd9b03fe30285648b083916565fd2ecc140306

b6beb0cb73c7e6bfc9438153fa2acf6001ac9093e5a182bf9e8ba7ef92fd9c1d

3c7fec0bc0ac5cc699258c359f6d6252bfbf5a5967f7a6cd9e7e4d2f6ad572aa

160b55fd9a9dfc3daaf337c7d4b1e5c094e879ebc1a339198a9c565a45fae124

45028522de12e7d3aa072322da0d6f2e5b4fda8552fe5977a8439dbfd83aae6e

99a37fe7e370cd262be8d411b38032ac5b12c470931aa4cbaa47d60532fa82fc

$ sudo docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

99a37fe7e370 redis "docker-entrypoint.s…" 43 seconds ago Up 41 seconds 0.0.0.0:6376->6379/tcp, 0.0.0.0:16376->16379/tcp redis-06

45028522de12 redis "docker-entrypoint.s…" 43 seconds ago Up 42 seconds 0.0.0.0:6375->6379/tcp, 0.0.0.0:16375->16379/tcp redis-05

160b55fd9a9d redis "docker-entrypoint.s…" 44 seconds ago Up 42 seconds 0.0.0.0:6374->6379/tcp, 0.0.0.0:16374->16379/tcp redis-04

3c7fec0bc0ac redis "docker-entrypoint.s…" 44 seconds ago Up 43 seconds 0.0.0.0:6373->6379/tcp, 0.0.0.0:16373->16379/tcp redis-03

b6beb0cb73c7 redis "docker-entrypoint.s…" 45 seconds ago Up 43 seconds 0.0.0.0:6372->6379/tcp, 0.0.0.0:16372->16379/tcp redis-02

5be42fac290d redis "docker-entrypoint.s…" 46 seconds ago Up 44 seconds 0.0.0.0:6371->6379/tcp, 0.0.0.0:16371->16379/tcp redis-01

$ sudo docker exec -it redis-01 /bin/sh

# ls

appendonly.aof nodes.conf

# Create the cluster

# redis-cli --cluster create 192.160.0.11:6379 192.160.0.12:6379 192.160.0.13:6379 192.160.0.14:6379 192.160.0.15:6379 192.160.0.16:6379 --cluster-replicas 1

>>> Performing hash slots allocation on 6 nodes...

Master[0] -> Slots 0 - 5460

Master[1] -> Slots 5461 - 10922

Master[2] -> Slots 10923 - 16383

Adding replica 192.160.0.15:6379 to 192.160.0.11:6379

Adding replica 192.160.0.16:6379 to 192.160.0.12:6379

Adding replica 192.160.0.14:6379 to 192.160.0.13:6379

M: 2511c99b36e7332e780e1a99d3800b5118cda204 192.160.0.11:6379

slots:[0-5460] (5461 slots) master

M: ff3d43f7a35176117f1cf87b8e7bb4293d36cdea 192.160.0.12:6379

slots:[5461-10922] (5462 slots) master

M: 24b37dae575d40028c7e11b9f2d9d36ba8615ac2 192.160.0.13:6379

slots:[10923-16383] (5461 slots) master

S: 612a061d020c6cfce795e0caee85f8ada13a1951 192.160.0.14:6379

replicates 24b37dae575d40028c7e11b9f2d9d36ba8615ac2

S: a45877d8533abda256dacd947333b857311e015a 192.160.0.15:6379

replicates 2511c99b36e7332e780e1a99d3800b5118cda204

S: c4b5e4b5bd9bdac83340686aab4caf80412d9adf 192.160.0.16:6379

replicates ff3d43f7a35176117f1cf87b8e7bb4293d36cdea

Can I set the above configuration? (type 'yes' to accept): yes

>>> Nodes configuration updated

>>> Assign a different config epoch to each node

>>> Sending CLUSTER MEET messages to join the cluster

Waiting for the cluster to join

>>> Performing Cluster Check (using node 192.160.0.11:6379)

M: 2511c99b36e7332e780e1a99d3800b5118cda204 192.160.0.11:6379

slots:[0-5460] (5461 slots) master

1 additional replica(s)

S: c4b5e4b5bd9bdac83340686aab4caf80412d9adf 192.160.0.16:6379

slots: (0 slots) slave

replicates ff3d43f7a35176117f1cf87b8e7bb4293d36cdea

S: 612a061d020c6cfce795e0caee85f8ada13a1951 192.160.0.14:6379

slots: (0 slots) slave

replicates 24b37dae575d40028c7e11b9f2d9d36ba8615ac2

M: ff3d43f7a35176117f1cf87b8e7bb4293d36cdea 192.160.0.12:6379

slots:[5461-10922] (5462 slots) master

1 additional replica(s)

M: 24b37dae575d40028c7e11b9f2d9d36ba8615ac2 192.160.0.13:6379

slots:[10923-16383] (5461 slots) master

1 additional replica(s)

S: a45877d8533abda256dacd947333b857311e015a 192.160.0.15:6379

slots: (0 slots) slave

replicates 2511c99b36e7332e780e1a99d3800b5118cda204

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.

# Cluster information

# redis-cli -c

127.0.0.1:6379> cluster info

cluster_state:ok

cluster_slots_assigned:16384

cluster_slots_ok:16384

cluster_slots_pfail:0

cluster_slots_fail:0

cluster_known_nodes:6

cluster_size:3

cluster_current_epoch:6

cluster_my_epoch:1

cluster_stats_messages_ping_sent:201

cluster_stats_messages_pong_sent:202

cluster_stats_messages_sent:403

cluster_stats_messages_ping_received:197

cluster_stats_messages_pong_received:201

cluster_stats_messages_meet_received:5

cluster_stats_messages_received:403

127.0.0.1:6379> cluster nodes

c4b5e4b5bd9bdac83340686aab4caf80412d9adf 192.160.0.16:6379@16379 slave ff3d43f7a35176117f1cf87b8e7bb4293d36cdea 0 1606361107161 2 connected

612a061d020c6cfce795e0caee85f8ada13a1951 192.160.0.14:6379@16379 slave 24b37dae575d40028c7e11b9f2d9d36ba8615ac2 0 1606361109184 3 connected

ff3d43f7a35176117f1cf87b8e7bb4293d36cdea 192.160.0.12:6379@16379 master - 0 1606361109000 2 connected 5461-10922

24b37dae575d40028c7e11b9f2d9d36ba8615ac2 192.160.0.13:6379@16379 master - 0 1606361108000 3 connected 10923-16383

2511c99b36e7332e780e1a99d3800b5118cda204 192.160.0.11:6379@16379 myself,master - 0 1606361108000 1 connected 0-5460

a45877d8533abda256dacd947333b857311e015a 192.160.0.15:6379@16379 slave 2511c99b36e7332e780e1a99d3800b5118cda204 0 1606361108676 1 connected

127.0.0.1:6379> set name jiaopaner

-> Redirected to slot [5798] located at 192.160.0.12:6379

OK

192.160.0.12:6379> get name

"jiaopaner"

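The redirect in the session above happens because Redis Cluster maps every key to one of 16384 hash slots using CRC16 (the XMODEM variant) and sends the client to the master that owns the slot. The sketch below recomputes the slot with Python's binascii.crc_hqx, which uses the same polynomial; key_slot and owner are hypothetical helper names, and the slot ranges are copied from the cluster creation log.

```python
import binascii

SLOTS = 16384
# Slot ranges and master addresses as reported by the cluster creation log above.
MASTERS = [
    (range(0, 5461), "192.160.0.11:6379"),
    (range(5461, 10923), "192.160.0.12:6379"),
    (range(10923, 16384), "192.160.0.13:6379"),
]


def key_slot(key: str) -> int:
    """CRC16 (XMODEM) of the key modulo 16384, honoring {hash tags}."""
    data = key.encode()
    start = data.find(b"{")
    if start != -1:
        end = data.find(b"}", start + 1)
        if end > start + 1:          # only a non-empty tag is used
            data = data[start + 1:end]
    return binascii.crc_hqx(data, 0) % SLOTS


def owner(key: str) -> str:
    slot = key_slot(key)
    return next(addr for slots, addr in MASTERS if slot in slots)


print(key_slot("name"), owner("name"))
```

If the sketch matches what Redis does, the key name should land in slot 5798 on 192.160.0.12:6379, as in the transcript.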
Deploying Spring Boot with Docker

#Dockerfile

FROM java:8

COPY *.jar /app.jar

CMD ["--server.port=8080"]

EXPOSE 8080

ENTRYPOINT ["java","-jar","/app.jar"]
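This Dockerfile uses both ENTRYPOINT and CMD in exec form. In that case CMD supplies default arguments that are appended to ENTRYPOINT, and any arguments given after the image name in docker run replace CMD. A minimal sketch of the rule follows; container_argv is a hypothetical helper and port 9090 is an arbitrary example value.

```python
from typing import List, Optional


def container_argv(entrypoint: List[str], cmd: List[str],
                   run_args: Optional[List[str]] = None) -> List[str]:
    """Combine exec-form ENTRYPOINT with CMD, or with docker run arguments if given."""
    extra = run_args if run_args else cmd  # run arguments replace CMD entirely
    return entrypoint + extra


entrypoint = ["java", "-jar", "/app.jar"]
cmd = ["--server.port=8080"]

print(container_argv(entrypoint, cmd))
# ['java', '-jar', '/app.jar', '--server.port=8080']
print(container_argv(entrypoint, cmd, ["--server.port=9090"]))
# ['java', '-jar', '/app.jar', '--server.port=9090']
```

Keeping the port in CMD rather than in ENTRYPOINT is what makes it overridable at run time without rebuilding the image.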

Compose (service orchestration)

Compose is a tool for defining and running multi-container Docker applications. With Compose, you use a YAML file to configure your application’s services. Then, with a single command, you create and start all the services from your configuration.

Using Compose is basically a three-step process:

1.Define your app’s environment with a Dockerfile so it can be reproduced anywhere.

2.Define the services that make up your app in docker-compose.yml so they can be run together in an isolated environment.

3.Run docker-compose up and Compose starts and runs your entire app

A docker-compose.yml looks like this:

version: "3.8"
services:
  web:
    build: .
    ports:
      - "5000:5000"
    volumes:
      - .:/code
      - logvolume01:/var/log
    links:
      - redis
  redis:
    image: redis
volumes:
  logvolume01: {}

Installing Compose

$ sudo curl -L "https://github.com/docker/compose/releases/download/1.27.4/docker-compose-$(uname -s)-$(uname -m)" -o /usr/local/bin/docker-compose

$ sudo chmod +x /usr/local/bin/docker-compose

$ sudo docker-compose --version

docker-compose version 1.27.4, build 40524192

docker-py version: 4.3.1

CPython version: 3.7.7

OpenSSL version: OpenSSL 1.1.0l 10 Sep 2019

demo

1.create workdir

$ mkdir composetest

$ cd composetest

2.create a file called app.py

import time

import redis
from flask import Flask

app = Flask(__name__)
cache = redis.Redis(host='redis', port=6379)

def get_hit_count():
    retries = 5
    while True:
        try:
            return cache.incr('hits')
        except redis.exceptions.ConnectionError as exc:
            if retries == 0:
                raise exc
            retries -= 1
            time.sleep(0.5)

@app.route('/')
def hello():
    count = get_hit_count()
    return 'Hello World! I have been seen {} times.\n'.format(count)

3.add requirements.txt file

flask

redis

4.add Dockerfile file

FROM python:3.7-alpine

WORKDIR /code

ENV FLASK_APP=app.py

ENV FLASK_RUN_HOST=0.0.0.0

RUN apk add --no-cache gcc musl-dev linux-headers

COPY requirements.txt requirements.txt

RUN pip install -r requirements.txt

EXPOSE 5000

COPY . .

CMD ["flask", "run"]

5.add docker-compose.yml file

version: "3.8"
services:
  web:
    build: .
    ports:
      - "5000:5000"
  redis:
    image: "redis:alpine"

$ ls

app.py docker-compose.yml Dockerfile requirements.txt

# Cluster deployment: docker stack deploy example --compose-file=docker-compose.yml

# Run (single-host deployment)

$ sudo docker-compose up

Creating network "composeset_default" with the default driver

Building web

Step 1/10 : FROM python:3.7-alpine

---> f4bd0adb4b78

Step 2/10 : WORKDIR /code

---> Using cache

---> 6dcde8d2643d

Step 3/10 : ENV FLASK_APP=app.py

---> Using cache

---> f183c013c9c2

Step 4/10 : ENV FLASK_RUN_HOST=0.0.0.0

---> Using cache

---> 00c9ae84df9d

Step 5/10 : RUN apk add --no-cache gcc musl-dev linux-headers

---> Running in 61171d666007

fetch http://dl-cdn.alpinelinux.org/alpine/v3.12/main/x86_64/APKINDEX.tar.gz

fetch http://dl-cdn.alpinelinux.org/alpine/v3.12/community/x86_64/APKINDEX.tar.gz

(1/13) Installing libgcc (9.3.0-r2)

(2/13) Installing libstdc++ (9.3.0-r2)

(3/13) Installing binutils (2.34-r1)

(4/13) Installing gmp (6.2.0-r0)

(5/13) Installing isl (0.18-r0)

(6/13) Installing libgomp (9.3.0-r2)

(7/13) Installing libatomic (9.3.0-r2)

(8/13) Installing libgphobos (9.3.0-r2)

(9/13) Installing mpfr4 (4.0.2-r4)

(10/13) Installing mpc1 (1.1.0-r1)

(11/13) Installing gcc (9.3.0-r2)

(12/13) Installing linux-headers (5.4.5-r1)

(13/13) Installing musl-dev (1.1.24-r10)

Executing busybox-1.31.1-r19.trigger

OK: 153 MiB in 48 packages

Removing intermediate container 61171d666007

---> 2a158f7989b5

Step 6/10 : COPY requirements.txt requirements.txt

---> af6bcc03a33e

Step 7/10 : RUN pip install -r requirements.txt

---> Running in 71fc3d541f23

Collecting flask

Downloading Flask-1.1.2-py2.py3-none-any.whl (94 kB)

Collecting redis

Downloading redis-3.5.3-py2.py3-none-any.whl (72 kB)

Collecting click>=5.1

Downloading click-7.1.2-py2.py3-none-any.whl (82 kB)

Collecting Jinja2>=2.10.1

Downloading Jinja2-2.11.2-py2.py3-none-any.whl (125 kB)

Collecting Werkzeug>=0.15

Downloading Werkzeug-1.0.1-py2.py3-none-any.whl (298 kB)

Collecting itsdangerous>=0.24

Downloading itsdangerous-1.1.0-py2.py3-none-any.whl (16 kB)

Collecting MarkupSafe>=0.23

Downloading MarkupSafe-1.1.1.tar.gz (19 kB)

Building wheels for collected packages: MarkupSafe

Building wheel for MarkupSafe (setup.py): started

Building wheel for MarkupSafe (setup.py): finished with status 'done'

Created wheel for MarkupSafe: filename=MarkupSafe-1.1.1-cp37-cp37m-linux_x86_64.whl size=16913 sha256=05c83f7a4d528469e3601243e13c426f1611ea0634ba8ea024568984d74d1a28

Stored in directory: /root/.cache/pip/wheels/b9/d9/ae/63bf9056b0a22b13ade9f6b9e08187c1bb71c47ef21a8c9924

Successfully built MarkupSafe

Installing collected packages: click, MarkupSafe, Jinja2, Werkzeug, itsdangerous, flask, redis

Successfully installed Jinja2-2.11.2 MarkupSafe-1.1.1 Werkzeug-1.0.1 click-7.1.2 flask-1.1.2 itsdangerous-1.1.0 redis-3.5.3

Removing intermediate container 71fc3d541f23

---> ebd64d838db7

Step 8/10 : EXPOSE 5000

---> Running in a47a4ed55632

Removing intermediate container a47a4ed55632

---> 880f46f3f44c

Step 9/10 : COPY . .

---> 553eb65d995f

Step 10/10 : CMD ["flask", "run"]

---> Running in 1712a24b595c

Removing intermediate container 1712a24b595c

---> 83683540551f

Successfully built 83683540551f

Successfully tagged composeset_web:latest

WARNING: Image for service web was built because it did not already exist. To rebuild this image you must use `docker-compose build` or `docker-compose up --build`.

Pulling redis (redis:alpine)...

alpine: Pulling from library/redis

188c0c94c7c5: Already exists

fb6015f7c791: Pull complete

f8890a096979: Pull complete

cd6e0c12d5bc: Pull complete

67b3665cee45: Pull complete

0705890dd1f7: Pull complete

Digest: sha256:b0e84b6b92149194d99953e44f7d1fa1f470a769529bb05b4164eae60d8aea6c

Status: Downloaded newer image for redis:alpine

Creating composeset_web_1 ... done

Creating composeset_redis_1 ... done

Attaching to composeset_redis_1, composeset_web_1

redis_1 | 1:C 27 Nov 2020 02:56:41.453 # oO0OoO0OoO0Oo Redis is starting oO0OoO0OoO0Oo

redis_1 | 1:C 27 Nov 2020 02:56:41.453 # Redis version=6.0.9, bits=64, commit=00000000, modified=0, pid=1, just started

redis_1 | 1:C 27 Nov 2020 02:56:41.453 # Warning: no config file specified, using the default config. In order to specify a config file use redis-server /path/to/redis.conf

redis_1 | 1:M 27 Nov 2020 02:56:41.454 * Running mode=standalone, port=6379.

redis_1 | 1:M 27 Nov 2020 02:56:41.454 # Server initialized

redis_1 | 1:M 27 Nov 2020 02:56:41.454 # WARNING overcommit_memory is set to 0! Background save may fail under low memory condition. To fix this issue add 'vm.overcommit_memory = 1' to /etc/sysctl.conf and then reboot or run the command 'sysctl vm.overcommit_memory=1' for this to take effect.

redis_1 | 1:M 27 Nov 2020 02:56:41.455 * Ready to accept connections

web_1 | * Serving Flask app "app.py"

web_1 | * Environment: production

web_1 | WARNING: This is a development server. Do not use it in a production deployment.

web_1 | Use a production WSGI server instead.

web_1 | * Debug mode: off

web_1 | * Running on http://0.0.0.0:5000/ (Press CTRL+C to quit)

$ curl localhost:5000

Hello World! I have been seen 1 times.

Swarm cluster

$ sudo docker swarm --help

Usage: docker swarm COMMAND

Manage Swarm

Commands:

ca Display and rotate the root CA

init Initialize a swarm

join Join a swarm as a node and/or manager

join-token Manage join tokens

leave Leave the swarm

unlock Unlock swarm

unlock-key Manage the unlock key

update Update the swarm

# 192.168.144.140: the server's IP address

$ sudo docker swarm init --advertise-addr 192.168.144.140

Swarm initialized: current node (27zm7bkz3lwi3o5zkpb3o3yyd) is now a manager.

To add a worker to this swarm, run the following command:

# Run the following command on each worker node

docker swarm join --token SWMTKN-1-1zw8g50ugwjyxprtvvlyhw3o3orj2zg2s717e3lc0n5ha80kjg-d6ovnit23xotl9ak27e3dnbgm 192.168.144.140:2377

# To add a manager node, follow the instruction below instead

To add a manager to this swarm, run 'docker swarm join-token manager' and follow the instructions.

Nginx cluster

$ sudo docker service create -p 8888:80 --name nginx-cluster nginx

# Scale the service to more replicas (either command works)

$ sudo docker service update --replicas 3 nginx-cluster

$ sudo docker service scale nginx-cluster=3

Unless otherwise stated, all articles on this blog are licensed under CC BY-SA 3.0. Please credit the source when reposting.